Sunday, May 31, 2020

S3 Service

Friday, May 29, 2020

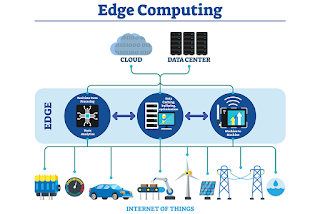

Edge Computing

Edge computing is a networking philosophy focused on bringing computing as close to the source of data as possible in order to reduce latency and bandwidth use. In simpler terms, edge computing means running fewer processes in the cloud and moving those processes to local places, such as a user’s computer, an IoT device, or an edge server.

Bringing computation to the network’s edge minimizes the amount of long-distance communication that has to happen between a client and server.

The growing number of IoT devices at the edge of the network produces a massive amount of data that must be processed in data centers, pushing network bandwidth requirements to the limit. Despite improvements in network technology, data centers cannot guarantee acceptable transfer rates and response times, which can be a critical requirement for many applications. Furthermore, devices at the edge constantly consume data coming from the cloud, forcing companies to build content delivery networks that decentralize data and service provisioning by leveraging physical proximity to the end user.

In a similar way, edge computing aims to move computation away from data centers toward the edge of the network, using smart objects, mobile phones, or network gateways to perform tasks and provide services on behalf of the cloud.

By moving services to the edge, it is possible to provide content caching, service delivery, storage, and IoT management with better response times and transfer rates.
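To make the bandwidth argument concrete, here is a minimal Python sketch, assuming a hypothetical sensor that produces one reading per second and an edge node that forwards only a per-minute summary instead of every raw sample. The function name `summarize_at_edge` and the summary fields are illustrative, not part of any edge platform's API.

```python
from statistics import mean


def summarize_at_edge(readings: list[float], window: int) -> list[dict]:
    """Collapse raw readings into one summary record per `window` samples."""
    summaries = []
    for start in range(0, len(readings), window):
        chunk = readings[start:start + window]
        summaries.append({
            "min": min(chunk),
            "max": max(chunk),
            "mean": mean(chunk),
            "count": len(chunk),
        })
    return summaries


# Hypothetical sensor: 600 temperature samples (one per second for 10 minutes).
raw = [20.0 + (i % 10) * 0.1 for i in range(600)]

# Only these summaries would be sent to the cloud instead of every raw sample.
summaries = summarize_at_edge(raw, window=60)
print(len(raw), "raw readings ->", len(summaries), "summaries sent upstream")
```

With a 60-sample window, 600 raw readings shrink to 10 summary records. The trade-off is that the cloud no longer sees individual samples, so this kind of local pre-processing only fits workloads where a summary is enough.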

Thursday, May 28, 2020

Docker Volumes

A Docker image is a collection of read-only layers. When you launch a container from an image, Docker adds a read-write layer on top of that stack of read-only layers. A union file system (implemented by storage drivers such as overlay2) presents this whole stack to the container as a single file system.

The first time a file from a read-only layer is changed, Docker copies it up into the top read-write layer and modifies that copy (a strategy known as copy-on-write). This leaves the original (read-only) file unchanged.

When a container is deleted, its top read-write layer is lost. This means that any changes made after the container was launched are gone as well.
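The layering and copy-up behavior described above can be modeled with a toy Python class. This is only an illustration under simplifying assumptions: layers are plain dictionaries mapping paths to file contents, and `LayeredFS` and `append` are hypothetical names. Real storage drivers such as overlay2 do this at the file-system level and also handle deletions, which this sketch ignores.

```python
class LayeredFS:
    """Toy model: an image's read-only layers plus one container's read-write layer."""

    def __init__(self, image_layers: list[dict[str, str]]) -> None:
        # Image layers, lowest first; this class never modifies them.
        self.image_layers = image_layers
        # The container's private writable layer starts out empty.
        self.rw_layer: dict[str, str] = {}

    def read(self, path: str) -> str:
        # Search from the top (read-write) layer down to the base image layer.
        if path in self.rw_layer:
            return self.rw_layer[path]
        for layer in reversed(self.image_layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def append(self, path: str, text: str) -> None:
        # Copy-up: take the current contents from whichever layer holds the file,
        # then store the modified copy in the read-write layer only.
        self.rw_layer[path] = self.read(path) + text


base_layer = {"/etc/motd": "hello from base\n"}
app_layer = {"/var/log/app.log": "started\n"}
image_layers = [base_layer, app_layer]

container = LayeredFS(image_layers)
container.append("/var/log/app.log", "request handled\n")
print(repr(container.read("/var/log/app.log")))  # 'started\nrequest handled\n'
print(repr(app_layer["/var/log/app.log"]))        # 'started\n' (image layer untouched)

# Deleting the container throws away its read-write layer; a new container
# started from the same image sees only the original files.
fresh = LayeredFS(image_layers)
print(repr(fresh.read("/var/log/app.log")))       # 'started\n'
```

Because every container gets its own empty `rw_layer` while sharing the same image layers, many containers can start from one image without copying it, which is the main reason for the layered design.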

Mounting a volume is a good solution if you want to:

· Push data to a container.

· Pull data from a container.

· Share data between containers.

A Docker volume "lives" outside the container, on the host machine.

From the container, the volume acts like a folder which you can use to store and retrieve data. It is simply a mount point to a directory on the host.
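The difference between the container's writable layer and a volume can be illustrated with a small Python model. In this hypothetical sketch, `ToyContainer` routes writes under a mount point (here `/data`) to a real temporary directory standing in for the volume on the host, and everything else to the container's writable layer. It is not how Docker implements mounts; it only shows why data in a volume outlives the container and can be picked up by another one.

```python
import tempfile
from pathlib import Path
from typing import Optional


class ToyContainer:
    """Hypothetical model: a writable layer plus mounts mapping container paths to host dirs."""

    def __init__(self, mounts: dict[str, Path]) -> None:
        self.mounts = mounts                 # e.g. {"/data": Path("/some/host/dir")}
        self.rw_layer: dict[str, str] = {}   # discarded when the container is removed

    def _host_path(self, path: str) -> Optional[Path]:
        # Translate a container path under a mount point to the matching host path.
        for mount_point, host_dir in self.mounts.items():
            if path.startswith(mount_point + "/"):
                return host_dir / path[len(mount_point) + 1:]
        return None

    def write(self, path: str, content: str) -> None:
        host_path = self._host_path(path)
        if host_path is None:
            self.rw_layer[path] = content    # stays inside the container
        else:
            host_path.parent.mkdir(parents=True, exist_ok=True)
            host_path.write_text(content)    # lands on the host, outside the container

    def read(self, path: str) -> str:
        host_path = self._host_path(path)
        return self.rw_layer[path] if host_path is None else host_path.read_text()


with tempfile.TemporaryDirectory() as tmp:
    volume_dir = Path(tmp)                         # stands in for the volume's host directory
    first = ToyContainer({"/data": volume_dir})
    first.write("/data/notes.txt", "kept")         # goes to the volume
    first.write("/app/scratch.txt", "lost")        # goes to the writable layer
    del first                                      # "remove" the first container

    second = ToyContainer({"/data": volume_dir})   # new container, same volume
    from_volume = second.read("/data/notes.txt")
    scratch_survived = "/app/scratch.txt" in second.rw_layer

print(from_volume)       # kept
print(scratch_survived)  # False
```

The same mapping is what makes sharing work: two containers given the same host directory read and write the same files.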

To create a volume, use the command:

sudo docker volume create [volume name]

List Volumes

To list all Docker volumes on the system, use the command:

sudo docker volume ls

This will return a list of all of the Docker volumes which have been created on the host.

Inspect a Volume

To inspect a named volume, use the command:

sudo docker volume inspect [volume name]

Remove a Volume

To remove a named volume, use the command:

sudo docker volume rm [volume name]

Monday, May 25, 2020

What is Ansible

- Ansible is free.

- Ansible is consistent and lightweight, and it places few constraints on the operating system or underlying hardware.

- It is very secure thanks to its agentless design, which relies on OpenSSH for communication with managed machines.

- No special system administrator skills are needed to install and use it.

- Its modularity regarding plugins, inventories, modules, and playbooks makes Ansible well suited to orchestrating large environments.

Sunday, May 24, 2020

Container Orchestration

Chaos Monkey

Saturday, May 23, 2020

Apache Kafka - Docker Installation

Major Challenges in Microservices

These are the ten major challenges of microservices architecture and their proposed solutions:
