Friday, June 26, 2020

AWS Honeycode

Docker on 32-bit Windows

- Install Chocolatey (choco) by following the instructions at: https://chocolatey.org/docs/installation

- Once it is installed, run the following commands in PowerShell:

- choco install docker-machine -y

- docker-machine create --driver virtualbox default

- docker-machine env | Invoke-Expression

Wednesday, June 24, 2020

Ansible Tower

- Role-based access control: you can set up teams and users in various roles. These can integrate with your existing LDAP or AD environment.

- Job scheduling: schedule your jobs and set repetition options

- Portal mode: this is a simplified view of automation jobs for newbies and less experienced Ansible users. This is an excellent feature as it truly lowers the entry barriers to starting to use Ansible.

- Fully documented REST API: allows you to integrate Ansible into your existing toolset and environment

- Tower Dashboard: use this to quickly view a summary of your entire environment. Simplifies things for sysadmins while sipping their coffee.

- Cloud integration: Tower is compatible with the major cloud environments: Amazon EC2, Rackspace, Azure.

Supported operating systems:

- Red Hat Enterprise Linux 6 64-bit

- Red Hat Enterprise Linux 7 64-bit

- CentOS 6 64-bit

- CentOS 7 64-bit

- Ubuntu 12.04 LTS 64-bit

- Ubuntu 14.04 LTS 64-bit

- Ubuntu 16.04 LTS 64-bit

System requirements:

- 64-bit support (kernel and runtime) and 20 GB of hard disk space are required.

- A minimum of 2 GB RAM is required (4+ GB RAM recommended).

- 2 GB RAM (minimum and recommended for Vagrant trial installations).

- 4 GB RAM is recommended per 100 forks.

Tuesday, June 23, 2020

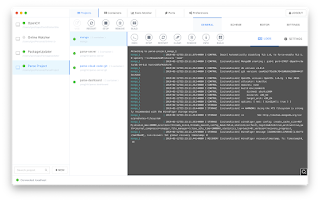

Top GUIs for Docker

Requirements:

- Go version >= 1.8

- Docker >= 1.13 (API >= 1.25)

- Docker-Compose >= 1.23.2 (optional)

Monday, June 22, 2020

Netflix Eureka Server

- Services have no prior knowledge about the physical location of other Service Instances

- Services advertise their existence and disappearance

- Services are able to find instances of another Service based on advertised metadata

- Instance failures are detected, and failed instances are no longer returned as valid discovery results

- Server cluster: a production setup includes a cluster of Eureka servers

- Client-side caching
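The register/heartbeat/evict cycle behind these points can be pictured with a small in-memory registry. This is a minimal sketch, not Eureka's actual API or implementation: the class `ToyServiceRegistry`, its methods, and the 90-second lease used in `main` are hypothetical, and the current time is passed in explicitly so the behaviour is easy to follow.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;

// Hypothetical toy registry illustrating lease-based discovery; this is NOT the Eureka API.
class ToyServiceRegistry {

    // A registered instance together with the time its lease expires.
    static final class Instance {
        final String serviceName;
        final String instanceId;
        final Map<String, String> metadata;
        volatile long leaseExpiresAtMillis;

        Instance(String serviceName, String instanceId, Map<String, String> metadata, long leaseExpiresAtMillis) {
            this.serviceName = serviceName;
            this.instanceId = instanceId;
            this.metadata = Map.copyOf(metadata);
            this.leaseExpiresAtMillis = leaseExpiresAtMillis;
        }
    }

    private final long leaseDurationMillis;
    // Keyed by instance id; ConcurrentHashMap so heartbeats and lookups can run concurrently.
    private final Map<String, Instance> instances = new ConcurrentHashMap<>();

    ToyServiceRegistry(long leaseDurationMillis) {
        this.leaseDurationMillis = leaseDurationMillis;
    }

    // A service advertises its existence.
    void register(String serviceName, String instanceId, Map<String, String> metadata, long nowMillis) {
        instances.put(instanceId, new Instance(serviceName, instanceId, metadata, nowMillis + leaseDurationMillis));
    }

    // Heartbeat: extends the lease; returns false if the instance is unknown (e.g. already evicted).
    boolean renew(String instanceId, long nowMillis) {
        Instance instance = instances.get(instanceId);
        if (instance == null) {
            return false;
        }
        instance.leaseExpiresAtMillis = nowMillis + leaseDurationMillis;
        return true;
    }

    // A service advertises its disappearance (graceful shutdown).
    void cancel(String instanceId) {
        instances.remove(instanceId);
    }

    // Discovery: ids of live instances of a service whose metadata contains the given key/value pair.
    List<String> find(String serviceName, String metaKey, String metaValue, long nowMillis) {
        evictExpired(nowMillis);
        return instances.values().stream()
                .filter(i -> i.serviceName.equals(serviceName))
                .filter(i -> metaValue.equals(i.metadata.get(metaKey)))
                .map(i -> i.instanceId)
                .sorted()
                .collect(Collectors.toList());
    }

    // Failure detection: instances that stopped sending heartbeats are removed.
    private void evictExpired(long nowMillis) {
        instances.values().removeIf(i -> i.leaseExpiresAtMillis <= nowMillis);
    }

    public static void main(String[] args) {
        ToyServiceRegistry registry = new ToyServiceRegistry(90_000); // 90 s lease (hypothetical)
        registry.register("orders", "orders-1", Map.of("zone", "a"), 0);
        registry.register("orders", "orders-2", Map.of("zone", "a"), 0);
        registry.renew("orders-1", 60_000); // only orders-1 keeps sending heartbeats
        System.out.println(registry.find("orders", "zone", "a", 100_000)); // [orders-1]
    }
}
```

Eureka itself builds much more on top of this idea (replication across the server cluster, client-side caching of the registry), so treat the sketch only as an illustration of the lease concept.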

Sunday, June 21, 2020

Install Jenkins Plugins

- Using the "Plugin Manager" in the web UI.

- Using the Jenkins CLI install-plugin command.

Docker on AWS

- Amazon Elastic Container Service with AWS Fargate

- Amazon EC2 instances

- Amazon Elastic Container Service for Kubernetes

- AWS Elastic Beanstalk with the single-container Docker platform

Thursday, June 18, 2020

Java 14 Features

Java 14 delivers 16 JDK Enhancement Proposals (JEPs), ranging from Java language changes to a new API for continuous monitoring with JDK Flight Recorder:

- Pattern Matching for instanceof (Preview)

- Non-Volatile Mapped Byte Buffers (Incubator)

- Helpful NullPointerExceptions

- Switch Expressions (Standard)

- Packaging Tool (Incubator)

- NUMA-Aware Memory Allocation for G1

- JFR Event Streaming

- Records (Preview)

- Deprecate the Solaris and SPARC Ports

- Remove the Concurrent Mark Sweep (CMS) Garbage Collector

- ZGC on macOS (experimental)

- ZGC on Windows (experimental)

- Deprecate the ParallelScavenge + SerialOld GC Combination

- Remove the Pack200 Tools and API

- Text Blocks (Second Preview)

- Foreign-Memory Access API (Incubator)
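As a small illustration of one item on this list, the sketch below uses a switch expression (JEP 361, standard in Java 14): the switch yields a value, a case can have several labels, and no `break` is needed. The `Day` enum and the `letterCount` and `kind` methods are hypothetical; compile with JDK 14 or later.

```java
// Switch expressions (JEP 361) are standard from Java 14 onward.
class SwitchExpressionDemo {
    // Hypothetical enum used only for this example.
    enum Day { MONDAY, TUESDAY, WEDNESDAY, THURSDAY, FRIDAY, SATURDAY, SUNDAY }

    // The switch is an expression: each arrow case produces the value directly.
    // Every enum constant is covered, so no default branch is required.
    static int letterCount(Day day) {
        return switch (day) {
            case MONDAY, FRIDAY, SUNDAY -> 6;
            case TUESDAY -> 7;
            case THURSDAY, SATURDAY -> 8;
            case WEDNESDAY -> 9;
        };
    }

    // A block-bodied case returns its value with yield.
    static String kind(Day day) {
        return switch (day) {
            case SATURDAY, SUNDAY -> "weekend";
            default -> {
                String label = "weekday";
                yield label;
            }
        };
    }

    public static void main(String[] args) {
        System.out.println(letterCount(Day.WEDNESDAY)); // 9
        System.out.println(kind(Day.SUNDAY));            // weekend
    }
}
```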

Java 11 Features

JDK 11 features and changes.

Important Changes:

- The deployment stack, required for Applets and Web Start Applications, was deprecated in JDK 9 and has been removed in JDK 11.

- In Windows and macOS, installing the JDK in previous releases optionally installed a JRE. In JDK 11, this is no longer an option.

- In this release, the JRE or Server JRE is no longer offered. Only the JDK is offered. Users can use jlink to create smaller custom runtimes.

- JavaFX is no longer included in the JDK. It is now available as a separate download from openjfx.io.

- Java Mission Control, which was shipped in JDK 7, 8, 9, and 10, is no longer included with the Oracle JDK. It is now a separate download.

- The packaging format for Windows has changed from tar.gz to .zip.

- The packaging format for macOS has changed from .app to .dmg.

Features:

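1) Local-Variable Syntax for Lambda Parameters:

```java
IntFunction<Integer> d1 = (int x) -> x * 2; // Valid
IntFunction<Integer> d2 = (var x) -> x * 3; // Compile error in Java 10, valid in Java 11
```

The second line won’t compile in Java 10, but will compile in Java 11.

But why do we need that at all, if we can already write it like this?

```java
IntFunction<Integer> d1 = x -> x * 3;
```

The answer is that `var` lets you attach modifiers or annotations to an implicitly typed lambda parameter, for example `(final var x) -> x * 3`, which the bare `x -> x * 3` form cannot express.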

2) String::lines

Another new feature in Java 11 is `String::lines`, which returns a stream of the lines in a string. This is ideal for situations where you have a multiline string.

```java
var str = "This\r\nis\r\nSrinivas";
str.lines()
    // we now have a `Stream<String>`
    .map(line -> "// " + line)
    .forEach(System.out::println);
```

Output:

```
// This
// is
// Srinivas
```

3) toArray(IntFunction) Default Method:

Java 11 adds a new default method, toArray(IntFunction), to the java.util.Collection interface. It transfers the elements of the collection into a newly created array of a specific runtime type. You can think of it as an overload of the toArray(T[]) method, which takes an array instance as its argument.
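A short sketch contrasting the two overloads on a `List<String>`; the class `ToArrayDemo` and its method names are hypothetical. Per the `Collection` Javadoc, the default implementation calls the generator with zero and passes the resulting array to `toArray(T[])`, so the returned array has the generator's runtime type and the collection's size.

```java
import java.util.Arrays;
import java.util.List;

class ToArrayDemo {
    static String[] viaGenerator(List<String> names) {
        // Java 11: Collection.toArray(IntFunction<T[]>); String[]::new supplies the array type.
        return names.toArray(String[]::new);
    }

    static String[] viaArrayArgument(List<String> names) {
        // Pre-Java 11 equivalent: pass an array instance (a zero-length one is the common idiom).
        return names.toArray(new String[0]);
    }

    public static void main(String[] args) {
        List<String> names = List.of("Java", "11", "toArray");
        String[] array = viaGenerator(names);
        System.out.println(array.getClass().getSimpleName()); // String[]
        System.out.println(Arrays.toString(array));           // [Java, 11, toArray]
    }
}
```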

4) Epsilon Garbage Collector:

JEP 318 adds Epsilon, a no-op garbage collector that handles memory allocation but does not implement any memory reclamation mechanism; once the Java heap is exhausted, the JVM shuts down. Epsilon GC is useful for performance testing and for comparing the costs and benefits of other garbage collectors. It is experimental in JDK 11 and is enabled with -XX:+UnlockExperimentalVMOptions -XX:+UseEpsilonGC.

5) Improved KeyStore Mechanisms:

Java 11 includes a new security property named ‘jceks.key.serialFilter’. If this filter is configured, the JCEKS KeyStore uses it when deserializing the encrypted key object stored in a SecretKeyEntry. If it is not configured, or if the filter result is UNDECIDED, the filter configured by ‘jdk.serialFilter’ is used instead.

6) Z Garbage Collector:

One of the most important additions in Java 11 is ZGC, the Z Garbage Collector (JEP 333), a scalable low-latency garbage collector. Its goals are that pause times do not exceed 10 ms, that pause times do not increase with the size of the heap or live set, and that it can handle heaps ranging from a few hundred megabytes to multiple terabytes. In JDK 11, ZGC is experimental and available on Linux/x64 only.

7) Dynamic Allocation of Compiler Threads:

Java 11 adds a new command-line flag, -XX:+UseDynamicNumberOfCompilerThreads, to control compiler threads dynamically. Previously, in tiered compilation mode, the VM started a large number of compiler threads on systems with many CPUs, regardless of the available memory or the number of compilation requests. With this flag (enabled by default), the VM starts only one compiler thread of each type at startup and starts or terminates further threads as needed.

8) New File Methods:

Java 11 also adds new file methods to java.nio.file.Files: ‘writeString()’ writes content to a file, and ‘readString()’ reads the contents of a file into a string. (‘isSameFile()’, which is often listed alongside them, has been available since Java 7.)
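A minimal sketch of the two new methods, round-tripping text through a temporary file. The class `FileMethodsDemo` and method `roundTrip` are hypothetical; both `Files` methods use UTF-8 unless a `Charset` is passed.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

class FileMethodsDemo {
    // Writes text with Files.writeString and reads it back with Files.readString.
    static String roundTrip(String content) {
        try {
            Path file = Files.createTempFile("java11-demo", ".txt");
            try {
                Files.writeString(file, content); // UTF-8 by default
                return Files.readString(file);    // UTF-8 by default
            } finally {
                Files.deleteIfExists(file);       // clean up the temporary file
            }
        } catch (IOException e) {
            // Wrap the checked exception so callers need no try/catch.
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("Hello, Java 11")); // Hello, Java 11
    }
}
```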

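9) isBlank():

This is a boolean method. It returns true if the string is empty or contains only whitespace, and false otherwise.

```java
class Blog {
    public static void main(String[] args) {
        String str1 = "";
        System.out.println(str1.isBlank()); // true

        String str2 = "SriniBlog";
        System.out.println(str2.isBlank()); // false

        String str3 = "   ";
        System.out.println(str3.isBlank()); // true: whitespace only
    }
}
```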

10) lines(): This method returns a stream of the lines in a string, split at line terminators.

```java
import java.util.stream.Collectors;

class Blog {
    public static void main(String[] args) {
        String str = "Blog\nFor\nSrini";
        System.out.println(str
                .lines()
                .collect(Collectors.toList())); // [Blog, For, Srini]
    }
}
```

11) Removal of thread methods: Thread.stop(Throwable obj) and Thread.destroy() have been removed in JDK 11 because they only threw UnsupportedOperationException and NoSuchMethodError, respectively. Other than that, they were of no use.

12) Local-Variable Syntax for Lambda Parameters: JDK 11 allows `var` to be used for lambda parameters. This was introduced for consistency with the local `var` syntax of Java 10.

```java
import java.util.stream.IntStream;

// Variable declared with var in a lambda expression
public class LambdaExample {
    public static void main(String[] args) {
        IntStream.of(1, 2, 3, 5, 6, 7)
                .filter((var i) -> i % 2 == 0)
                .forEach(System.out::println); // prints 2 and 6
    }
}
```

13) Pattern recognizing methods:

asMatchPredicate(): This method is similar to the Java 8 method asPredicate(). Introduced in JDK 11, it creates a predicate that tests whether the pattern matches the entire input string, whereas asPredicate() tests whether the pattern is found anywhere in the input.

```
jshell> var str = Pattern.compile("aa").asMatchPredicate();

jshell> str.test("aabb");
Output: false

jshell> str.test("aa");
Output: true
```
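The difference from asPredicate() is easiest to see side by side. In this sketch (the class and field names are hypothetical), `asPredicate()` is backed by `Matcher.find()` and `asMatchPredicate()` by `Matcher.matches()`.

```java
import java.util.function.Predicate;
import java.util.regex.Pattern;

class MatchPredicateDemo {
    // Java 8: asPredicate() uses find(), so the pattern may match anywhere in the input.
    static final Predicate<String> CONTAINS_AA = Pattern.compile("aa").asPredicate();

    // Java 11: asMatchPredicate() uses matches(), so the whole input must match.
    static final Predicate<String> IS_EXACTLY_AA = Pattern.compile("aa").asMatchPredicate();

    public static void main(String[] args) {
        System.out.println(CONTAINS_AA.test("aabb"));   // true
        System.out.println(IS_EXACTLY_AA.test("aabb")); // false
        System.out.println(IS_EXACTLY_AA.test("aa"));   // true
    }
}
```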

Removed Features and Options

1. Removal of com.sun.awt.AWTUtilities Class

2. Removal of Lucida Fonts from Oracle JDK

3. Removal of appletviewer Launcher

4. Oracle JDK’s javax.imageio JPEG Plugin No Longer Supports Images with alpha

5. Removal of sun.misc.Unsafe.defineClass

6. Removal of Thread.destroy() and Thread.stop(Throwable) Methods

7. Removal of sun.nio.ch.disableSystemWideOverlappingFileLockCheck Property

8. Removal of sun.locale.formatasdefault Property

9. Removal of JVM-MANAGEMENT-MIB.mib

10. Removal of SNMP Agent

11. Removal of Java Deployment Technologies

12. Removal of JMC from the Oracle JDK

13. Removal of JavaFX from the Oracle JDK

14. JEP 320: Removal of the Java EE and CORBA Modules

Man-in-The-Middle Attack

- Man-in-the-middle is a type of eavesdropping attack that occurs when a malicious actor inserts themselves as a relay/proxy into a communication session between people or systems.

- A MITM attack exploits the real-time processing of transactions, conversations, or transfers of other data.

- Man-in-the-middle attacks allow attackers to intercept, send, and receive data not meant for them, without either party knowing until it is too late.

Tools:

- sqlmap

- Aircrack-ng

- Ncrack

- SSLStrip

- Ettercap

- Metasploit Framework

Monday, June 15, 2020

Greenfield and Brownfield

Greenfield Software Development:

Greenfield software development refers to developing a system for a totally new environment, starting from a clean slate with no legacy code around. It is the approach used when you’re starting afresh, with no restrictions or dependencies.

A pure greenfield project is quite rare these days; you frequently end up interacting with or updating some amount of existing code, or enabling integrations.

Some examples of greenfield software development include: building a website or app from scratch, setting up a new data center, or even implementing a new rules engine.

Advantages:

• Gives an opportunity to implement a state-of-the-art technology solution from scratch

• Provides a clean slate for software development

• No compulsion to work within the constraints of existing systems or infrastructure

• No dependencies or ties to existing software, preconceived notions, or existing business processes

Disadvantages:

• Since all aspects of the new system need to be defined, it can be quite time-consuming

• With so many possible development options, there may be no clear understanding of the approach to take

• It may be hard to get everyone involved to make critical decisions in a decent time frame

Brownfield Software Development:

Brownfield software development refers to the development and deployment of a new software system in the presence of existing or legacy software systems.

Brownfield development usually happens when you want to develop or improve upon an existing application, and compels you to work with previously created code.

Therefore, any new software architecture must consider and coexist with systems already in place – so as to enhance existing functionality or capability.

Examples of brownfield software development include: adding a new module to an existing enterprise system, integrating a new feature into software that was developed earlier, or upgrading code to enhance the functionality of an app.

Advantages:

• Offers a place to start with a predetermined direction.

• Gives a chance to add improvements to existing technology solutions.

• Supports working with defined business processes and technology solutions.

• Allows existing code to be reused to add new features.

Disadvantages:

• Requires thorough knowledge of the existing systems, services, and data on which the new system needs to be built.

• A large portion of the existing complex environment may need to be re-engineered so that it makes operational sense for the new business requirements.

• Requires a detailed and precise understanding of the constraints of the existing business and IT, so that the new project does not fail.

• Dealing with legacy code can not only slow down the development process but also add to overall development costs.

Refer: http://skolaparthi.com/

Friday, June 12, 2020

Kubectl Commands

$ kubectl delete cronjob <cronjob name>

Saturday, June 6, 2020

Design Patterns in Microservices

Microservices is a distinctive method of developing software systems that focuses on building single-function modules with well-defined interfaces and operations.

Microservices have many benefits for Agile and DevOps teams.

The goal of microservices is to increase the velocity of application releases, by decomposing the application into small autonomous services that can be deployed independently. A microservices architecture also brings some challenges.

The design patterns shown here can help mitigate these challenges.

Below are common microservices design patterns:

Decomposition Patterns:

- Decompose by Business Capability

- Decompose by Subdomain

- Decompose by Transactions

- Strangler Pattern

- Bulkhead Pattern

- Sidecar Pattern

Integration Patterns:

- API Gateway pattern

- Aggregator Pattern

- Proxy Pattern

- Gateway Routing Pattern

- Chained Microservice Pattern

- Branch Pattern

- Client-Side UI Composition Pattern

Database Patterns:

- Database per Service

- Shared Database Per Service

- CQRS

- Event Sourcing

- Saga Pattern

Observability Patterns:

- Log Aggregation

- Performance Metrics

- Distributed Tracing

- Health Check

Cross-Cutting Patterns:

- External Configuration

- Service Discovery Pattern

- Circuit Breaker Pattern

- Blue-Green Deployment Pattern
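Of the patterns listed, the circuit breaker is easy to sketch in a few lines. The following is a minimal, single-threaded illustration with hypothetical names (`SimpleCircuitBreaker`, `failureThreshold`, `openMillis`); the current time is passed in so the state changes are easy to trace, and real systems would also need thread safety and monitoring, which this sketch omits.

```java
import java.util.function.Supplier;

// Hypothetical, single-threaded circuit breaker sketch (not thread-safe).
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // consecutive failures that trip the breaker
    private final long openMillis;      // how long to stay OPEN before allowing a trial call
    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAtMillis = 0;

    SimpleCircuitBreaker(int failureThreshold, long openMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
    }

    State state() {
        return state;
    }

    // Routes a remote call through the breaker, returning the fallback when the call is blocked or fails.
    <T> T call(Supplier<T> remoteCall, Supplier<T> fallback, long nowMillis) {
        if (state == State.OPEN) {
            if (nowMillis - openedAtMillis < openMillis) {
                return fallback.get();      // fail fast without touching the remote service
            }
            state = State.HALF_OPEN;        // cool-down elapsed: allow one trial call
        }
        try {
            T result = remoteCall.get();
            state = State.CLOSED;           // success closes the circuit
            consecutiveFailures = 0;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN;         // trip (or re-trip) the breaker
                openedAtMillis = nowMillis;
            }
            return fallback.get();
        }
    }

    public static void main(String[] args) {
        SimpleCircuitBreaker breaker = new SimpleCircuitBreaker(2, 1_000);
        Supplier<String> failing = () -> { throw new IllegalStateException("service down"); };
        Supplier<String> fallback = () -> "cached";

        breaker.call(failing, fallback, 0);
        breaker.call(failing, fallback, 10);
        System.out.println(breaker.state());                             // OPEN
        System.out.println(breaker.call(() -> "live", fallback, 500));   // cached (still open)
        System.out.println(breaker.call(() -> "live", fallback, 1_500)); // live (trial call succeeded)
        System.out.println(breaker.state());                             // CLOSED
    }
}
```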

Monday, June 1, 2020

Docker Swarm

Docker Swarm is a group of either physical or virtual machines that are running the Docker application and that have been configured to join together in a cluster. Once a group of machines have been clustered together, you can still run the Docker commands that you're used to, but they will now be carried out by the machines in your cluster.

The activities of the cluster are controlled by a swarm manager, and machines that have joined the cluster are referred to as nodes.

Docker Swarm is a container orchestration tool, meaning that it allows the user to manage multiple containers deployed across multiple host machines.

The cluster management and orchestration features embedded in the Docker Engine are built using SwarmKit.

A node is an instance of the Docker Engine participating in the swarm.

Worker nodes receive and execute tasks dispatched from manager nodes. By default, manager nodes also run services as worker nodes, but you can configure them to run manager tasks exclusively and be manager-only nodes.

Manager nodes perform the orchestration and cluster management functions required to maintain the desired state of the swarm.

The swarm manager can automatically assign the service a PublishedPort, or you can configure a PublishedPort for the service.

A service is the definition of the tasks to execute on the manager or worker nodes. It is the central structure of the swarm system and the primary root of user interaction with the swarm.

Node management:

- Initialize a swarm: docker swarm init

- List swarm nodes: docker node ls

- Activate a node (after maintenance): docker node update --availability active node_name

Service management:

- List services (manager node): docker service ls

- List the tasks of a service (manager node): docker service ps service_name

- Inspect a service: docker service inspect service_name

- Scale a service: docker service scale service_name=N

- Remove a service: docker service rm service_name

Stack management:

- Deploy a stack from a docker-compose file: docker stack deploy -c docker-compose.yml stack_name

- List stacks: docker stack ls

- List stack services: docker stack services stack_name

- List stack tasks: docker stack ps stack_name

- Remove a stack: docker stack rm stack_name

Network management:

- List networks: docker network ls

- Create an overlay network: docker network create -d overlay network_name

- Remove a network: docker network rm network_name

Monitor services:

- Docker stats: docker stats

- Service logs: docker service logs service_name

AWS Disaster Recovery
